What I love about QGIS is that one is able to create a nice map quickly. The other day I was asked to make a situation map for the project we are working on, to include it in a presentation. All I had was a laptop with no relevant spatial data at all, but with QGIS installed (I didn't even have a mouse to draw with). Yet it was more than enough: I loaded OSM as a base layer and used the annotation tool to add more sense to it. Voilà:



Thursday, June 18, 2015

Sunday, November 2, 2014

How to Create a Delaunay Triangulation Graph from a .shp-file Using NetworkX

For my own project I needed to create a graph based on a Delaunay triangulation using the NetworkX Python library. A special condition was that all the edges must be unique. The points for the triangulation are stored in a .shp-file.

I was lucky enough to find this thread on Delaunay triangulation using NetworkX graphs. I made a nice function out of it for processing NetworkX point graphs. This function preserves node attributes (which are lost after triangulation) and calculates the lengths of the edges. It can be further improved (and most likely will be), but even in its current state it is very handy.


Example of use:

import networkx as nx
import scipy.spatial
import matplotlib.pyplot as plt

path = '/directory/'
f_path = path + 'filename.shp'
G = nx.read_shp(f_path)
GD = createTINgraph(G, show = True)

Code for the function:

import math

import matplotlib.pyplot as plt
import networkx as nx
import scipy.spatial


def createTINgraph(point_graph, show=False, calculate_distance=True):
    '''
    Creates a graph based on a Delaunay triangulation

    @param point_graph: either a graph made by read_shp() or another NetworkX point graph
        (its nodes must be (x, y) tuples)
    @param show: whether or not the resulting graph should be shown, boolean
    @param calculate_distance: whether the length of the edges should be calculated
    @return - a graph made from a Delaunay triangulation

    @Copyright notice: this code is an improved (by Yury V. Ryabov, 2014, riabovvv@gmail.com) version of
    Tom's code taken from this discussion
    https://groups.google.com/forum/#!topic/networkx-discuss/D7fMmuzVBAw
    '''
    # nodes of a point graph are (x, y) tuples; keep them together with their attributes
    original_nodes = list(point_graph.nodes(data=True))
    points = [xy for xy, attributes in original_nodes]
    TIN = scipy.spatial.Delaunay(points)

    edges = set()
    # for each Delaunay triangle
    for simplex in TIN.simplices:
        a, b, c = (int(vertex) for vertex in simplex)
        # for each edge of the triangle
        # sort the vertices
        # (sorting avoids duplicated edges being added to the set)
        # and add to the edges set
        for u, v in ((a, b), (a, c), (b, c)):
            edges.add(tuple(sorted((u, v))))

    # make a graph based on the Delaunay triangulation edges
    graph = nx.Graph(list(edges))

    # add nodes attributes to the TIN graph from the original points
    for n, (XY, attributes) in enumerate(original_nodes):
        # X and Y tuple - coordinates of the original point
        graph.nodes[n]['XY'] = XY
        # add other attributes
        graph.nodes[n].update(attributes)

    # calculate Euclidean length of edges and write it as an edge attribute
    if calculate_distance:
        for node_1, node_2 in graph.edges():
            x1, y1 = graph.nodes[node_1]['XY']
            x2, y2 = graph.nodes[node_2]['XY']
            dist = math.sqrt((x2 - x1) ** 2 + (y2 - y1) ** 2)
            graph.edges[node_1, node_2]['distance'] = round(dist, 2)

    # plot graph
    if show:
        pointIDXY = dict(enumerate(points))
        nx.draw(graph, pointIDXY)
        plt.show()

    return graph
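The key trick of the function (sorting the two vertices of every triangle side so that a side shared by two triangles lands in the set only once) can be tried without SciPy or NetworkX. Below is a minimal sketch in plain Python. The hand-written `points` and `triangles` lists and the helper names `unique_edges` and `edge_lengths` are made up for illustration and are not part of the function above.

```python
import math


def unique_edges(triangles):
    """Collect the undirected edges of the triangles without duplicates."""
    edges = set()
    for a, b, c in triangles:
        for u, v in ((a, b), (a, c), (b, c)):
            # (min, max) ordering makes (2, 0) and (0, 2) the same set element
            edges.add((min(u, v), max(u, v)))
    return edges


def edge_lengths(points, edges):
    """Euclidean length of every edge, rounded to 2 digits."""
    lengths = {}
    for u, v in edges:
        (x1, y1), (x2, y2) = points[u], points[v]
        lengths[(u, v)] = round(math.hypot(x2 - x1, y2 - y1), 2)
    return lengths


# a 3 x 4 rectangle split into two triangles along the diagonal 0-2
points = [(0.0, 0.0), (3.0, 0.0), (3.0, 4.0), (0.0, 4.0)]
triangles = [(0, 1, 2), (2, 3, 0)]

edges = unique_edges(triangles)
lengths = edge_lengths(points, edges)
print(sorted(edges))
print(lengths[(0, 2)])
```

The two triangles have six sides in total, but the shared diagonal is stored only once, so the set ends up with five edges.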

Labels: GIS, graph, Python, science, spatial data

Monday, September 8, 2014

Schema of the Conservation Areas in Leningradskaya Region: Some Notes for Beginner Mappers

|

| Schema of the Conservation Areas in Leningradskaya Region |

About a year ago I was asked to create a small (B5 size) and simple schema of the conservation areas in Leningradskaya region. I did it using QGIS. Here is the author's version of the schema and several notes that might be helpful for a beginner map-maker.

There was a huge disproportion between the areas of different objects, and both polygon and point markers were needed to show them. I decided to use the Centroid Fill option in the polygon style to be able to use only one layer (polygon) instead of two (polygon and point). With Centroid Fill, the point markers drawn at the centres of the small polygons overlap and mask these tiny polygons.

All the administrative borders were stored in one layer (and there are far more borders than one sees here). They are drawn using rule-based symbology, so I didn't even need to subset this layer to get rid of the rest of the polygons in it.

All the names of the surrounding countries, regions, the city and the water bodies are not labels derived from layers, but labels created inside the map composer. It was quicker and their position was more precise, which is crucial for such a small schema.

There was a lack of space for the legend, so I had to utilise every bit of the canvas to place it. I had to use 3 legend items here. One of them actually overlaps another, and setting a transparent background for the legends helped with that.

Finally, the labels for the conservation areas (numbers) were outlined in white to be perfectly readable. Some of them were moved manually (with the coordinates stored in special columns of the layer) to prevent overlapping with other labels and data.

P.S. Don't be afraid to argue with the client about the workflow. Initially I was asked to manually digitise a 20 000 x 15 000 pixel raster map of the Leningrad region to extract the most precise borders of the conservation areas (and districts of the region). Of course I could have done it and charged more money for the job, but what's the point if some of those borders cannot even be seen on this small schema? So I convinced the client to use data from OSM and saved myself a lot of time.

Labels: environment, GIS, Leningrad region, maps, OSM, QGIS, spatial data

Saturday, January 4, 2014

Unifying Extent and Resolution of Rasters Using Processing Framework in QGIS

My post about modification of extent and resolution of rasters drew quite a bit of attention and I decided to make a small New Year's present to the community and create a QGIS Processing script to automate the process.

The script was designed to be used within the Processing module of QGIS. It makes two or more rasters of your choice have the same spatial extent and pixel resolution, so you will be able to use them simultaneously in the raster calculator. No interpolation is made: new pixels get a predefined value. Here is a simple illustration of what it does:

|

| Modifications to rasters A and B |
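The extent part of this operation can be sketched in a few lines of plain Python. This is only an illustration of the idea and not the code of the Processing script: rasters are represented by made-up dictionaries (`x_min`, `y_max`, `data`), all of them are assumed to already share one CRS and one pixel size, and resampling and No Data handling are left out.

```python
def unify_extent(rasters, pixel=1.0, fill_value=0):
    """Pad every raster to the union of all extents.

    Each raster is a dict (a made-up structure for this sketch):
      'x_min', 'y_max' - coordinates of the upper-left corner
      'data'           - list of rows, top row first
    """
    x_min = min(r['x_min'] for r in rasters)
    y_max = max(r['y_max'] for r in rasters)
    x_max = max(r['x_min'] + len(r['data'][0]) * pixel for r in rasters)
    y_min = min(r['y_max'] - len(r['data']) * pixel for r in rasters)
    n_cols = int(round((x_max - x_min) / pixel))
    n_rows = int(round((y_max - y_min) / pixel))

    unified = []
    for r in rasters:
        # position of the old upper-left pixel inside the new grid
        col_off = int(round((r['x_min'] - x_min) / pixel))
        row_off = int(round((y_max - r['y_max']) / pixel))
        # new pixels get the predefined value, old ones are copied as they are
        grid = [[fill_value] * n_cols for _ in range(n_rows)]
        for i, row in enumerate(r['data']):
            grid[row_off + i][col_off:col_off + len(row)] = row
        unified.append(grid)
    return unified


a = {'x_min': 0, 'y_max': 2, 'data': [[1, 1], [1, 1]]}
b = {'x_min': 1, 'y_max': 3, 'data': [[2, 2], [2, 2]]}
result = unify_extent([a, b], pixel=1.0, fill_value=0)
for grid in result:
    for row in grid:
        print(row)
    print()
```

Both 2 x 2 inputs come out as 3 x 3 grids covering the same area, with the added pixels set to `fill_value`.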

To use my humble gift simply download this archive and unpack files from 'scripts' folder to your .../.qgis2/processing/scripts folder (or whatever folder you configured in Processing settings). At the next start of QGIS you will find a 'Unify extent and resolution' script in 'Processing Toolbox' in 'Scripts' under 'Raster processing' category:

Note that 'Help' is available:

|

| Help tab |

Let's describe the parameters. 'rasters' are the rasters that you want to unify. They must have the same CRS. Note that all output rasters will have the same pixel resolution as the first raster in the input.

|

| Raster selection window |

'replace No Data values with' provides the value for the pixels that will be added to the rasters; the original No Data values will be replaced with this value as well. Note that in the output rasters this value will not be saved as a No Data value, but as a regular one. This is done to ease further calculations that would include both of these rasters, but I'm open to suggestions, and if you think that the No Data attribute should be assigned I can add the corresponding functionality.

Finally you must provide a path to the folder where the output rasters will be stored in the 'output directory' field. A '_unified' suffix will be added to the file names of the derived rasters: 'raster_1.tif' -> 'raster_1_unified.tif'

If the CRSs of the rasters don't match each other (and you bypass the built-in check) or an invalid path is provided, a warning message will pop up and the execution will be cancelled:

|

| Example of the warning message |

When the execution is finished you will be notified and asked if rasters should be added to TOC:

Happy New Year!

P.S. I would like to thank the Australian government for making the code they create open source. Their kindness saved me a couple of hours.

Wednesday, September 18, 2013

Count Unique Values In Raster Using SEXTANTE and QGIS

I decided to make my script for counting unique values in a raster more usable. Now you can use it via SEXTANTE in QGIS. Download the script for SEXTANTE and extract the archive to the folder that is intended for your Python SEXTANTE scripts (for example ~/.qgis2/processing/scripts). If you don't know where this folder is located, go to Processing -> Options and configuration -> Scripts -> Scripts folder, see the screenshot:

Now restart QGIS and in the SEXTANTE Toolbox go to Scripts. You will notice a new group named Raster processing. The script named Unique values count is located there:

Launch it and you will see its dialogue window:

|

| Main window |

Note that Help is available:

Both single- and multi-band rasters are accepted for processing. After the raster is chosen (input field) one needs to decide whether to round the values for counting or not. If no rounding is needed, leave the 'round values to ndigits' field blank. Otherwise enter the needed value there. Note that negative values are accepted: this will round values to ndigits before the decimal point.
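The effect of the rounding parameter is easy to try in plain Python. The sketch below assumes that the script's ndigits behaves like the second argument of Python's built-in `round()`; the helper `count_unique` and the sample values are made up for illustration.

```python
from collections import Counter


def count_unique(values, ndigits=None):
    """Count unique values, optionally rounding them first."""
    if ndigits is not None:
        values = (round(value, ndigits) for value in values)
    return Counter(values)


band = [1234.56, 1234.44, 1278.0, 15.2]

print(count_unique(band))       # no rounding: every value is unique
print(count_unique(band, 0))    # round to whole numbers
print(count_unique(band, -2))   # round to hundreds
```

With ndigits = -2 the first two values fall into the same class (1200.0), so the number of unique values drops from four to three. Keep in mind that Python 3 rounds exact halves to the nearest even number.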

When the setup is finished, hit the Run button. You will get something like this:

|

| Result window |

Saturday, August 17, 2013

How to Count Unique Values in Raster

Recently I demonstrated how to get histograms for rasters in QGIS. But what if one needs to count the exact number of occurrences of a given value in a raster (for example to assess classification results)? In this case scripting is required.

UPD: the script below was transformed into a more usable SEXTANTE script, see this post.


We'll use Python-GDAL for this task (thanks to tutorials such as this one, it is extremely easy to learn how to use it). The script I wrote is probably not optimal (though it works just fine and quickly, so you may use it freely), because it is not clear to me whether someone would want to use it for floating-point data types (which does not seem that feasible due to the great number of unique values in this case) or whether such a task is performed only for integers (in which case the code might be optimised). It works with single- and multi-band rasters.

How to use

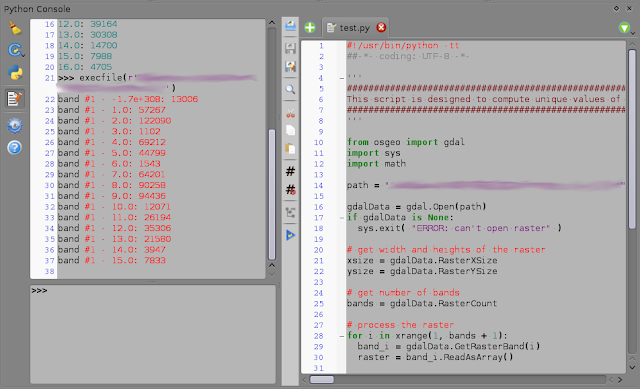

The code provided below should be copied into a text file and saved with the '.py' extension. Enable the Python console in QGIS, hit the "Show editor" button in the console and open the script file. Then replace 'raster_path' with the actual path to the raster and hit the 'Run script' button. In the console output you will see a sorted list of the unique values of the raster per band:

|

| Script body (to the right) and its output (to the left) |

Script itself

#!/usr/bin/python -tt
#-*- coding: UTF-8 -*-

'''
#####################################################################
This script is designed to compute unique values of the given raster
#####################################################################
'''

from osgeo import gdal
import sys
import math

path = "raster_path"

gdalData = gdal.Open(path)
if gdalData is None:
    sys.exit( "ERROR: can't open raster" )

# get width and height of the raster
xsize = gdalData.RasterXSize
ysize = gdalData.RasterYSize

# get number of bands
bands = gdalData.RasterCount

# process the raster
for i in range(1, bands + 1):
    band_i = gdalData.GetRasterBand(i)
    raster = band_i.ReadAsArray()

    # create dictionary for unique values count
    count = {}

    # count unique values for the given band
    for col in range( xsize ):
        for row in range( ysize ):
            cell_value = raster[row, col]

            # check if cell_value is NaN
            if math.isnan(cell_value):
                cell_value = 'Null'

            # add cell_value to dictionary
            try:
                count[cell_value] += 1
            except KeyError:
                count[cell_value] = 1

    # print results sorted by cell_value ('Null', if present, goes last)
    for key in sorted(count, key=lambda k: (isinstance(k, str), k)):
        print("band #%s - %s: %s" % (i, key, count[key]))

Thursday, July 25, 2013

A Note About proj4 in R

It's been a long time since I had to transform some coordinates 'manually'. I mean I had a list of coordinates that I needed to [re]project. There are some nice proprietary tools for it, but who needs them when open source is everywhere?

Proj4 is an obvious tool for this task. And using it from within R is a somewhat natural approach when you need to process a csv-file. There is a package called... 'proj4' that provides a simple interface to proj4. It has only two functions: project and ptransform. The first one is meant to provide the transition between unprojected and projected coordinate reference systems, and the second one between different projected coordinate reference systems.

Look closely at the description of the project function: "Projection of lat/long coordinates or its inverse". Ok... here is a huge trap, which made me write this post. If you try to use coordinates in lat/long order here, you will screw up! Your points will fly thousands of miles away from their initial position. Actually you have to use coordinates in long/lat order to get the result you need. Don't trust manuals ;-)

Oh, I really hate the guy who started this practice of placing longitude before latitude in GIS...
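How far a point "flies" when the two numbers are swapped can be estimated without any GIS library. The sketch below does not use the proj4 package: it is a hand-written forward spherical Mercator projection in plain Python, and the test point (roughly Saint Petersburg) is my own choice for illustration.

```python
import math

R = 6378137.0  # radius of the sphere used by spherical Mercator, metres


def mercator(lon, lat):
    """Forward spherical Mercator: degrees in (long/lat order), metres out."""
    x = R * math.radians(lon)
    y = R * math.log(math.tan(math.pi / 4 + math.radians(lat) / 2))
    return x, y


lon, lat = 30.3, 59.95          # roughly Saint Petersburg

right = mercator(lon, lat)      # long/lat order, as the function expects
wrong = mercator(lat, lon)      # lat/long order, as the manual suggests

shift_km = math.hypot(right[0] - wrong[0], right[1] - wrong[1]) / 1000
print("correct: %.0f, %.0f" % right)
print("swapped: %.0f, %.0f" % wrong)
print("shift, km: %.0f" % shift_km)
```

The function accepts the swapped pair without any complaint, because both numbers are valid as a longitude and as a latitude. That is exactly why this kind of mistake is so easy to miss.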

Labels: GIS, R, spatial data

Sunday, July 7, 2013

Getting raster histogram in QGIS using SEXTANTE and R

The issue with the broken histogram creation tool in QGIS annoyed me for far too long. Sometimes you just need a quick glance at the histogram of a raster to decide how to process it or to assess the distribution of classes. But as you know, the QGIS histogram tool creates broken plots: they are just useless (I don't know if something has changed in Master).

Fortunately there is SEXTANTE, an extremely useful plugin that provides access to hundreds of geoprocessing algorithms and allows you to create your own very quickly. SEXTANTE grants access to R functions, and one of the out-of-the-box example scripts, surprise-surprise, is about raster histogram creation. "Hooray! The problem is solved!" - one may cry out. Unfortunately it's not. The "Raster histogram" tool is somewhat suitable only for single-band rasters (its algorithm is as simple as hist(as.matrix(raster))). Obviously we need to create our own tool. Luckily it is extremely simple if one is already familiar with R.

To create your own R tool for QGIS, go to the Analysis menu and activate the SEXTANTE toolbox. Then go to R-scripts -> Tools, double-click 'Create new R script' and just copy and paste this code (an explanation will follow the description):

UPD: now this code works for both multi-band and single-band rasters. Here is the code for QGIS 1.8.

##layer=raster
##Tools=group
##dens_or_hist=string hist
##showplots
library('rpanel')
library('rasterVis')

str <- dens_or_hist
if (str !='dens' & str != 'hist'){
  rp.messagebox('you must enter "dens" or "hist"', title = 'oops!')
} else {
  im <- stack(layer)
  test <- try(nbands(im))
  if (class(test) == 'try-error') {im <- raster(layer)}
  if (str == 'dens') {
    densityplot(im)
  } else if (str == 'hist') {
    histogram(im)
  }
}

UPD2: Here is the code for QGIS 2.0:

##layer=raster
##Raster processing=group
##dens_or_hist=string hist
##showplots
library('rpanel')
library('rasterVis')

str <- dens_or_hist
if (str !='dens' & str != 'hist'){
  rp.messagebox('you must enter "dens" or "hist"', title = 'oops!')
} else {
  if (nbands(layer) == 1) {
    layer <- as.matrix(layer)
    layer <- raster(layer)
  }
  if (str == 'dens') {
    densityplot(layer)
  } else if (str == 'hist') {
    histogram(layer)
  }
}

Now save the script. It will appear among other R scripts. Launch it and you will see this window:

|

| Script window |

|

| Density plot |

|

| Histogram |

|

| Fail window |

How it works.

- ##layer=raster provides us with the drop-down menu to select the needed raster, which will be addressed as layer in our script.
- ##Tools=group will assign our script to the Tools group of R scripts in SEXTANTE.
- ##dens_or_hist=string provides us with the string input field for dens or hist.
- ##showplots will show the plot in the Results window after execution.
- library('rpanel') is needed to show a pop-up window with the error when the user fails to type 4 letters correctly.
- library('rasterVis') works on top of the raster package and is responsible for plotting our graphs.

Then we define the condition to pop up an error window via rp.messagebox if the plot type is misspelled or missing. The following piece of code is interesting:

im <- stack(layer)

test <- try(nbands(im))

if (class(test) == 'try-error') {im <- raster(layer)}

In order to process the raster correctly we need to have a RasterStack object for a multi-band raster or a RasterLayer object if the raster is single-banded. The 'raster' package is a bit weird with its classes, so there are even different functions for determining the number of bands for different classes and types of rasters. So the code above is weird too))) We assume that the raster is multi-banded and create a RasterStack object. Then we check whether our guess was correct: if the raster is actually single-banded we will get an error when we try to use the nbands() function on it. If that is the case, we create a RasterLayer object instead of a RasterStack.

Finally, we transform our raster to the RasterStack object to be able to run either densityplot() or histogram() function.
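For readers who are more at home in Python than in R, the same idea (treat a single band as a stack of one, then build one histogram per band) can be sketched with nested lists. This is not what rasterVis does internally. The functions `as_stack`, `band_histogram` and `histograms` and the tiny sample rasters are made up for this illustration.

```python
def as_stack(raster):
    """Return a list of bands; a single band (list of rows) is wrapped in a list."""
    return raster if isinstance(raster[0][0], list) else [raster]


def band_histogram(band, bins=10):
    """Count the cells of one band in `bins` equal-width classes."""
    cells = [value for row in band for value in row]
    low, high = min(cells), max(cells)
    width = (high - low) / bins or 1.0  # avoid a zero width for a constant band
    counts = [0] * bins
    for value in cells:
        # the maximum value would land one class too far, so clamp it
        index = min(int((value - low) / width), bins - 1)
        counts[index] += 1
    return counts


def histograms(raster, bins=10):
    """One list of class counts per band."""
    return [band_histogram(band, bins) for band in as_stack(raster)]


single = [[1, 2], [3, 4]]              # one band, 2 x 2 cells
multi = [single, [[0, 0], [0, 10]]]    # two bands

print(histograms(single, bins=2))
print(histograms(multi, bins=2))
```

Unlike the R script, the check here inspects the data structure directly instead of relying on an error, which is only possible because the sketch controls its own toy data format.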

Final notes

In the blink of an eye we were able to create quite a handy tool to replace a long-broken one. But there is a downside. This script won't be able to handle huge rasters: I wasn't able to process my IKONOS imagery where the sides of the rasters exceed 10000 pixels. Also I would not recommend using it on hyperspectral imagery with dozens of bands, because the process will run extremely sloooow (it will take hours and tons of RAM) and the result will obviously be unreadable.

Sunday, March 31, 2013

RSAGA: Getting Started

RSAGA provides access to the geocomputation capabilities of SAGA GIS from within the R environment. Having SAGA GIS installed is a (quite obvious) prerequisite for using RSAGA.

In Linux x64 additional preparations are sometimes needed. SAGA, as well as other software that would like to use SAGA modules, usually searches for them in /usr/lib/saga, but if your Linux is x64 they will usually be located in /usr/lib64/saga. Of course you may set up the proper environment variables, but the laziest and overall most effective way is just to add a symbolic link to /usr/lib64/saga (or whatever the correct path is) from /usr/lib/saga:

:~> sudo ln -s /usr/lib64/saga /usr/lib/saga

Now no app should miss these modules.
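Before creating the link it may be worth checking which of the two directories actually exists on your system. Here is a small sketch in plain Python. The two paths are the ones mentioned above, while the helper names `find_saga_modules` and `needs_symlink` are made up for illustration.

```python
import os

# the two locations mentioned in this post
CANDIDATES = ('/usr/lib/saga', '/usr/lib64/saga')


def find_saga_modules(candidates=CANDIDATES):
    """Return the first existing directory from candidates, or None."""
    for path in candidates:
        if os.path.isdir(path):
            return path
    return None


def needs_symlink(expected='/usr/lib/saga', actual='/usr/lib64/saga'):
    """True if the modules exist in `actual` but nothing is found at `expected`."""
    return os.path.isdir(actual) and not os.path.exists(expected)


print("SAGA modules found in: %s" % find_saga_modules())
print("symbolic link needed: %s" % needs_symlink())
```

The sketch only reports the situation and does not create the link itself, so it is safe to run without root privileges.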

Wednesday, August 1, 2012

Sverdlovskaya Region, Russia: a Field Survey in Autumn. Part 2: Scars on the Earth

Ok, it's time to finish the story about land monitoring in Sverdlovskaya region. In this post I would like to demonstrate some of the most unpleasant types of land use.

Let's begin with illegal dumping. This dump (note the smoke from burning waste) is located right next to a potato field (mmm... seems these potatoes are tasty). The ground was intentionally excavated here for dumping waste. Obviously this dump is used by the agricultural firm that owns this land, but who cares...

|

| Panorama of freshly burnt illegal dump |

The next stop is peat cutting. Huge biotopes are destroyed for no good reason (I can't agree that using peat as an energy source is a good one). In the picture below you can see a peat cutting with an area of 1402 ha. There are dozens of them in the study area...

|

| Peat Cutting (RapidEye, natural colours) |

But the ugliest scars on the Earth's surface are left by mining works. There is Asbestos town in Sverdlovskaya region. It was named after the asbestos that is mined there. The quarry has an area of 1470 ha and its depth is over 400 meters. Its slag-heaps cover another 2500 ha... The irony is that this quarry gives the town its jobs and is killing it at the same time. You see, if you want to dig deeper you have to make the quarry wider accordingly. The current depth is 450 m and in the projects it is over 900 m, but the quarry is already next to residential buildings. So the quarry is going to consume the town... By the way, the local cemetery has already been consumed. Guess what happened to the human remains? Well, it is Russia, so they were dumped into the nearest slag-heap.

Here is the panorama of the quarry. You may try to locate BelAZ trucks down there ;-)

|

| Asbestos quarry |

Here is the part of the biggest slag-heap:

|

| A slag-heap |

That's how it looks from space:

|

| Asbestos town area (imagery - RapidEye, NIR-G-B pseudo-colour composition) |

And in the end I will show you a very basic schema of the disturbed land in the study area (no settlements or roads included). Terrifying, isn't it?

|

| Basic schema of disturbed land |

Labels: disturbed land, environment, GIS, illegal dumps and landfills, Russia, science, spatial data, travel, waste

Friday, June 8, 2012

A Bit More on Ignorance

By chance I found an article that has some relation to my previous post. The article has an intriguing name: "The Influence of Map Design on Resource Management Decision Making". Unfortunately it is not in open access, so I wasn't able to read it. Also, the abstract omits the conclusions... And I would prefer to see a study of real cases... Nevertheless, here it is:

Abstract

The popular use of GIS and related mapping technologies has changed approaches to map-making. Cartography is no longer the domain of experts, and the potential for poorly designed maps has increased. This trend has raised concerns that poorly designed maps might mislead decision makers. Hence, an important research question is this: Can different cartographic displays of one data set so influence decision makers as to alter a decision that relies on the mapped data? This question was studied using a spatial decision problem typical for decision makers in a resource management agency in the United States (the USDA Forest Service). Cartographic display was varied by constructing three hypothetical map treatments from the same data set. Map treatments and other test materials were mailed to Forest Service District Rangers. All District Rangers received the same decision problem, but each received only one of the three map treatments. The participants were asked to make a decision using the decision problem and map treatment. Information about the decision and the influence of each map treatment was obtained through a questionnaire. The research and its implications for map-based decision making are presented and discussed.

Labels: GIS, science, spatial data

Tuesday, April 10, 2012

MOSKitt Geo: a Tool for Spatial Database Design

I needed to create a design for a spatial database and found out that, despite the availability of tools for regular database design, there is a lack of such tools for spatial database design.

Fortunately there is a suitable free cross-platform tool, MOSKitt Geo, which allows you to design spatial databases for PostGIS and Oracle. MOSKitt is not quite user-friendly, and if you are not an experienced software designer you will need to read the f*** manual.

Installation

- Download MOSKitt itself and extract it (installation is not required). Note that the x86_64 version (and the version for Mac) is available for 1.3.2 and earlier releases (the current one is 1.3.8).

- Install the MOSKitt Geo plugin using the instructions from here. Note that for the third step of the instructions you may also need to type something in the search area of the dialogue and erase it to make the list of plug-ins appear (at least I needed to). And one more thing: the MOSKitt Geo repository is already there, so you don't need to add it, just choose it from the drop-down menu.

PostgreSQL/PostGIS database connection and reverse engineering

To connect to an existing database go to Window->Show View->Other->Data Management->Data Source Explorer

A new tab will appear:

Right-click on Database Connections and choose New->PostgreSQL. You will see a dialogue for the DB connection settings. Note that there might be no PostgreSQL JDBC Driver defined. In this case first download the needed driver and then click on the icon to the right of the driver drop-down menu (New Driver Definition).

|

| Connection settings |

In the Edit Driver Definition dialogue provide path to the driver and click Ok.

|

| Driver definition dialogue |

When the database connection is established, right-click on it and choose Reverse Engineering.

Labels: GIS, MOSKitt Geo, PostGIS

Sunday, March 11, 2012

QGIS and GDAL>=1.9 Encoding Issue: a Workaround

The cause of the issue is described here (Rus). Briefly: GDAL>=1.9 attempts to re-encode the .dbf-file to UTF-8 on the basis of the LDID (Language Driver ID) written in the .dbf header. But unfortunately the LDID is usually missing, and in particular QGIS does not write it to the .dbf-files it creates. When the LDID is missing, GDAL>=1.9 assumes that the encoding of the .dbf-file is ISO8859_1 (Latin-1), which makes non-Latin characters unreadable.

The workaround I'm currently using is creating additional .cpg-file, that contains the ID of the encoding used. For example if encoding is Windows-1251, .cpg-file contains the following record: "1251" (without quotes). When .cpg-file is present, GDAL>=1.9 + QGIS works just fine.

UPD: on some OS you will need to use ID from Additional ID column instead of Encoding ID column.

UPD3: There is another workaround. You can open the .dbf-file in Libre Office Calc (Open Office Calc), providing the needed encoding, and save it from there. This will write the necessary header to the .dbf-file and QGIS will open the attributes correctly. Note that this will also turn the field names into upper case.

UPD4: there is a plugin for encoding fixing available.
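Both steps of the first workaround (checking whether the LDID is set and creating the .cpg-file) are easy to script. Below is a minimal sketch in plain Python. It relies on the dBASE header layout, where the language driver ID is stored in the byte at offset 29; the function names `ldid` and `write_cpg` are made up for illustration, and the default ID '1251' is just the example used in this post.

```python
from pathlib import Path


def ldid(dbf_path):
    """Return the Language Driver ID: the byte at offset 29 of the .dbf header.

    0 means that the LDID is not set.
    """
    with open(dbf_path, 'rb') as dbf:
        header = dbf.read(32)
    return header[29]


def write_cpg(shp_path, encoding_id='1251'):
    """Create <name>.cpg next to <name>.shp; the file contains only the encoding ID."""
    cpg_path = Path(shp_path).with_suffix('.cpg')
    cpg_path.write_text(encoding_id, encoding='ascii')
    return cpg_path
```

A possible use: call ldid() on the layer's .dbf-file and, if it returns 0, call write_cpg() with the path to the .shp-file and the ID of the encoding the attributes were actually saved in.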

Here is a table of the encoding IDs (taken from here):

| Encoding ID | Encoding name | Additional ID | Other names |
| --- | --- | --- | --- |
| 1252 | Western | iso-8859-1 (except when 128-159 is used: then use "Windows-1252") | iso8859-1, iso_8859-1, iso-8859-1, ANSI_X3.4-1968, iso-ir-6, ANSI_X3.4-1986, ISO_646, irv:1991, ISO646-US, us, IBM367, cp367, csASCII, latin1, iso_8859-1:1987, iso-ir-100, ibm819, cp819, Windows-1252 |
| 20105 | us-ascii | us-ascii, ascii | |
| 28592 | Central European (ISO) | iso-8859-2 | iso8859-2, iso-8859-2, iso_8859-2, latin2, iso_8859-2:1987, iso-ir-101, l2, csISOLatin2 |
| 1250 | Central European (Windows) | Windows-1250 | Windows-1250, x-cp1250 |
| 1251 | Cyrillic (Windows) | Windows-1251 | Windows-1251, x-cp1251 |
| 1253 | Greek (Windows) | Windows-1253 | Windows-1253 |
| 1254 | Turkish (Windows) | Windows-1254 | Windows-1254 |
| 932 | Japanese (Shift-JIS) | shift_jis | shift_jis, x-sjis, ms_Kanji, csShiftJIS, x-ms-cp932 |
| 51932 | Japanese (EUC) | x-euc-jp | Extended_UNIX_Code_Packed_Format_for_Japanese, csEUCPkdFmtJapanese, x-euc-jp, x-euc |
| 50220 | Japanese (JIS) | iso-2022-jp | csISO2022JP, iso-2022-jp |
| 1257 | Baltic (Windows) | Windows-1257 | windows-1257 |
| 950 | Traditional Chinese (BIG5) | big5 | big5, csbig5, x-x-big5 |
| 936 | Simplified Chinese (GB2312) | gb2312 | GB_2312-80, iso-ir-58, chinese, csISO58GB231280, csGB2312, gb2312 |
| 20866 | Cyrillic (KOI8-R) | koi8-r | csKOI8R, koi8-r |
| 949 | Korean (KSC5601) | ks_c_5601 | ks_c_5601, ks_c_5601-1987, korean, csKSC56011987 |
| 1255 (logical) | Hebrew (ISO-logical) | Windows-1255 | iso-8859-8i |
| 1255 (visual) | Hebrew (ISO-Visual) | iso-8859-8 | ISO-8859-8 Visual, ISO-8859-8 , ISO_8859-8, visual |
| 862 | Hebrew (DOS) | dos-862 | dos-862 |
| 1256 | Arabic (Windows) | Windows-1256 | Windows-1256 |
| 720 | Arabic (DOS) | dos-720 | dos-720 |
| 874 | Thai | Windows-874 | Windows-874 |
| 1258 | Vietnamese | Windows-1258 | Windows-1258 |
| 65001 | Unicode UTF-8 | UTF-8 | UTF-8, unicode-1-1-utf-8, unicode-2-0-utf-8 |
| 65000 | Unicode UTF-7 | UNICODE-1-1-UTF-7 | utf-7, UNICODE-1-1-UTF-7, csUnicode11UTF7, utf-7 |
| 50225 | Korean (ISO) | ISO-2022-KR | ISO-2022-KR, csISO2022KR |
| 52936 | Simplified Chinese (HZ) | HZ-GB-2312 | HZ-GB-2312 |
| 28594 | Baltic (ISO) | iso-8859-4 | ISO_8859-4:1988, iso-ir-110, ISO_8859-4, ISO-8859-4, latin4, l4, csISOLatin4 |
| 28595 | Cyrillic (ISO) | iso_8859-5 | ISO_8859-5:1988, iso-ir-144, ISO_8859-5, ISO-8859-5, cyrillic, csISOLatinCyrillic, csISOLatin5 |
| 28597 | Greek (ISO) | iso-8859-7 | ISO_8859-7:1987, iso-ir-126, ISO_8859-7, ISO-8859-7, ELOT_928, ECMA-118, greek, greek8, csISOLatinGreek |
| 28599 | Turkish (ISO) | iso-8859-9 | ISO_8859-9:1989, iso-ir-148, ISO_8859-9, ISO-8859-9, latin5, l5, csISOLatin5 |
